AI: The Inflection Point of Our Generation

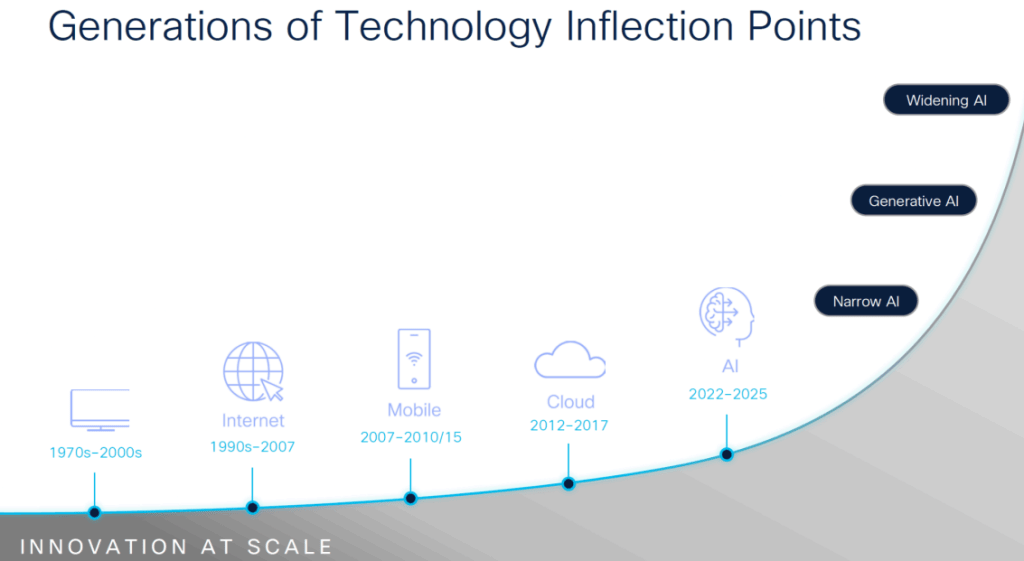

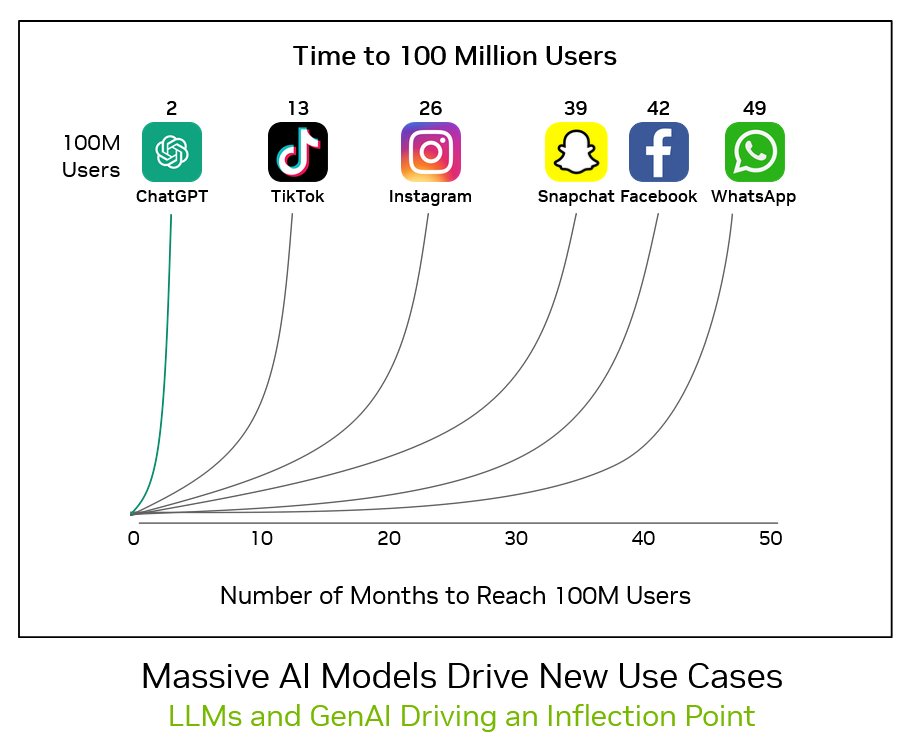

Industry giants such as Cisco Systems and Nvidia are heralding a pivotal moment in technology, driven by advances in AI and Generative AI (GenAI). Jensen Huang, founder and CEO of Nvidia, captures this shift: “AI is at an inflection point, setting up for broad adoption reaching into every industry. From startups to major enterprises, we are witnessing an accelerated interest in the versatility and capabilities of generative AI.” This transformation is reminiscent of earlier technological revolutions (computers, the internet, and mobile), but it is unfolding at unprecedented speed, because each new wave of technology accelerates the pace of innovation.

Even as Moore’s Law (the observation that the number of transistors on a chip doubles roughly every two years) slows down, GPU technology is surging. According to Huang, GPU AI inference performance increased roughly 1,000-fold between 2016 and 2024, and this exponential growth shows no signs of abating.
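To put those two growth rates side by side, the short Python sketch below converts each total improvement into an equivalent yearly multiplier. It assumes smooth, constant compounding from 2016 to 2024, which real hardware generations do not follow, so treat the result as an illustration of scale rather than a measurement.

```python
def annual_growth_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years` years."""
    return total_factor ** (1 / years)

YEARS = 8  # 2016 to 2024

# Moore's Law: 2x every two years, i.e. 2 ** (8 / 2) = 16x over eight years.
moore_total = 2 ** (YEARS / 2)
moore_yearly = annual_growth_factor(moore_total, YEARS)

# Huang's figure: roughly 1,000x inference performance over the same period.
gpu_total = 1000
gpu_yearly = annual_growth_factor(gpu_total, YEARS)

print(f"Moore's Law: {moore_total:.0f}x total, {moore_yearly:.2f}x per year")
print(f"GPU (per Huang): {gpu_total}x total, {gpu_yearly:.2f}x per year")
```

Under these assumptions, Moore's Law works out to about 1.41x per year (16x over eight years), while a 1,000-fold gain implies about 2.37x per year.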

As companies funnel substantial investments into AI, breakthroughs are anticipated across various sectors, including healthcare, autonomous vehicles, cybersecurity, and augmented/virtual reality. We are on the brink of a new era where disruption unfolds before our very eyes.

Understanding the Basics

To grasp AI effectively, it’s crucial to start with the fundamentals. For many readers, the abundance of acronyms and technical jargon can be overwhelming. At its core, AI is driven by data—without it, AI as we know it would not exist.

AI has evolved significantly since its nascent stages in the early 1950s, when some of the first AI programs were written for the Ferranti Mark 1 computer at the University of Manchester. By the mid-2020s, ChatGPT (launched in 2022) had come to exemplify the power of Generative AI (GenAI) built on Large Language Models (LLMs), which can hold near-human conversations on a wide range of topics.

Defining AI and Its Subfields

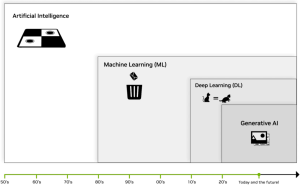

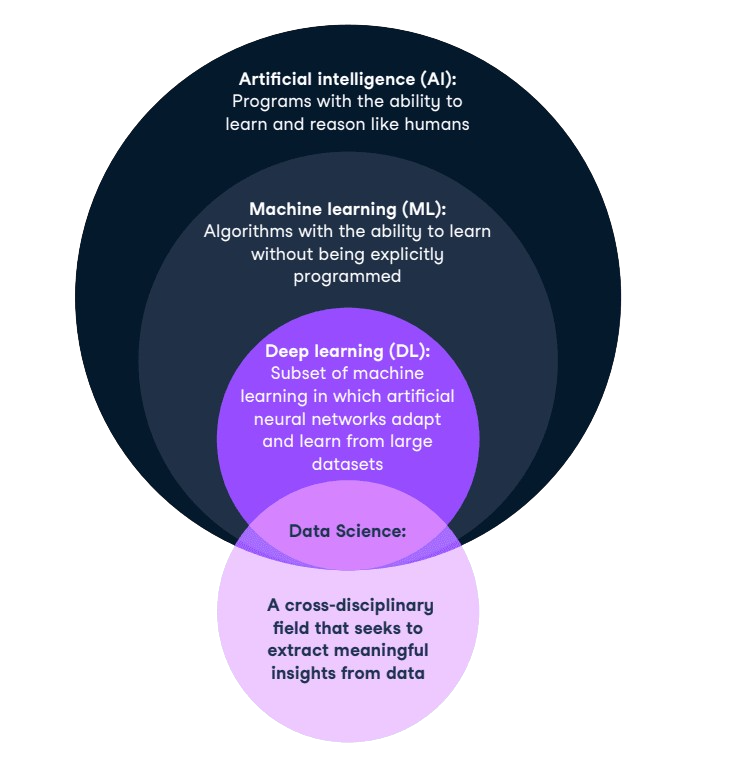

Artificial Intelligence (AI) is a branch of computer science focused on developing intelligent agents capable of performing tasks that typically require human intelligence, such as problem-solving, speech recognition, and decision-making.

AI encompasses several subfields, including:

- Machine Learning (ML): A subset of AI that develops algorithms allowing computers to learn from data and improve over time without being explicitly programmed for each task. ML is instrumental in applications like image recognition, natural language processing, recommendation systems, and fraud detection.

- Deep Learning (DL): A specialized branch of ML that uses neural networks, loosely modeled after the human brain, to learn hierarchical representations of data through interconnected layers of neurons. Trained on vast amounts of data, DL excels in complex fields like image and speech recognition.

- Generative AI (GenAI): A subset of Deep Learning, GenAI focuses on creating models that generate new content resembling the data they were trained on. GenAI can produce realistic images, text, or music; notable examples include Google’s Bard (since renamed Gemini) and OpenAI’s ChatGPT.
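To make "learning from data without explicit programming" concrete, here is a minimal Python sketch using the simplest possible model: a straight line fitted by gradient descent. The training data is hypothetical. Deep learning applies the same basic idea (repeatedly adjusting parameters to reduce error) to networks with millions or billions of parameters.

```python
# Hypothetical training data produced by the rule y = 3x + 2.
# The program is never told "3" or "2"; it must infer them from the examples.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x + 2 for x in xs]

w, b = 0.0, 0.0       # model parameters, starting from an uninformed guess
learning_rate = 0.02  # step size for each update
n = len(xs)

for _ in range(5000):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Move each parameter a small step in the direction that reduces the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned w={w:.3f}, b={b:.3f}")
```

After enough updates, w and b settle very close to 3 and 2: the rule was recovered from examples rather than written into the program.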

The Impact of Large Language Models (LLMs)

To grasp the scale of modern Large Language Models (LLMs), consider Meta’s Llama 3, unveiled on April 18, 2024, in 8-billion- and 70-billion-parameter sizes; the Llama 3.1 update in July 2024 added a staggering 405-billion-parameter model. Llama 3 was pre-trained on about 15 trillion tokens sourced from publicly available data and fine-tuned with over 10 million human-annotated examples. Meta used 24,000 Nvidia H100 GPUs, each costing around $30,000, which puts the estimated cost at roughly $720 million in GPU hardware alone. Remarkably, despite the massive resources required to create it, Llama 3 is openly available: its weights can be downloaded and used free of charge under Meta’s Llama 3 Community License, including in private AI environments. Platforms like Hugging Face (huggingface.co) offer access to this and many other open LLMs, providing a valuable resource for AI developers.
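The hardware estimate is simply GPU count times unit price. The Python sketch below reproduces it and adds a second back-of-the-envelope figure: how much memory the model weights alone occupy. It assumes 16-bit (2-byte) parameters and ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
GPU_COUNT = 24_000          # H100 GPUs, as cited in the text
PRICE_PER_GPU_USD = 30_000  # approximate unit price, as cited in the text

hardware_cost_usd = GPU_COUNT * PRICE_PER_GPU_USD
print(f"Estimated GPU hardware cost: ${hardware_cost_usd / 1e6:,.0f} million")

def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; 2 bytes/param assumes 16-bit precision.

    Activations, the KV cache, and framework overhead are ignored,
    so real memory requirements are higher.
    """
    return params * bytes_per_param / 1e9

for name, params in [("8B", 8e9), ("70B", 70e9), ("405B", 405e9)]:
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB of weights at 16-bit precision")
```

At 16-bit precision the 405B model needs roughly 810 GB just for its weights, far more than the 80 GB on a standard H100, which is one reason the largest models are served across multiple GPUs or in quantized form.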

Generative AI Use Cases

Generative AI is transforming various industries by enabling the creation of new content and enhancing existing processes. Its applications span numerous fields, demonstrating its versatility and impact. Here are some notable use cases:

- Media/Video Streaming: Generative AI enhances media and video streaming services by optimizing content delivery networks (CDNs), improving video quality through advanced compression algorithms, and enabling personalized content recommendations based on user preferences and behavior. This results in a more engaging and customized viewing experience for users.

- Cloud Gaming: In cloud gaming, AI improves user experiences by predicting player actions, reducing latency, and dynamically adjusting game parameters in real time. This keeps gameplay smooth and responsive and creates immersive environments, enhancing overall player satisfaction.

- Animation and Modeling: Generative AI automates repetitive tasks in animation and modeling, generates complex simulations, and optimizes rendering processes. This accelerates production timelines, improves visual quality, and allows for more intricate and realistic animations and models.

- Design & Visualization: AI tools streamline the design process by automating design iterations, generating complex designs based on input criteria, and visualizing concepts in virtual environments. This facilitates rapid prototyping, enhances design efficiency, and supports creative exploration.

- 3D Rendering: Generative AI accelerates 3D rendering processes by optimizing scene composition, enhancing lighting effects, and reducing rendering times. This technology enables the faster production of high-quality visual content for movies, video games, and architectural visualization.

- Diagnostic Imaging: In healthcare, generative AI assists in analyzing diagnostic images, detecting anomalies, and improving diagnostic accuracy and efficiency. AI algorithms can identify patterns and abnormalities that might be missed by the human eye, leading to better patient outcomes and more efficient healthcare delivery.

- Drug Discovery and Development: AI models accelerate drug discovery by predicting how different compounds interact with biological targets. This enables researchers to identify potential drug candidates more quickly, reducing the time and cost associated with traditional drug development processes.

- Customer Service Automation: Generative AI enhances customer service by automating responses to common inquiries, providing personalized support, and improving interaction quality. AI-driven chatbots and virtual assistants can handle a wide range of customer service tasks, leading to more efficient and satisfying customer interactions.

- Content Creation: In journalism and content creation, AI can generate articles, reports, and creative writing based on prompts or data inputs. This technology can assist writers by providing suggestions, generating drafts, and even creating complete pieces of content, streamlining the content creation process.

- Financial Forecasting: AI models analyze financial data to predict market trends, assess investment risks, and optimize trading strategies. By processing large volumes of financial information, generative AI helps investors and financial analysts make more informed decisions and improve portfolio management.

- Personalized Marketing: Generative AI enables personalized marketing strategies by analyzing consumer behavior and preferences. It can generate targeted advertising content, create customized offers, and optimize marketing campaigns, leading to higher engagement and conversion rates.

The Market for AI

Artificial Intelligence (AI) is not just a buzzword; it’s a revolutionary force transforming industries across the globe. According to Bloomberg Intelligence, the generative AI market is expected to skyrocket from $40 billion in 2022 to an astounding $1.3 trillion by 2032. This explosive growth, reflecting a compound annual growth rate (CAGR) of 42%, underscores the immense potential and widespread adoption of AI technologies.
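The 42% figure can be checked from the two endpoints. A compound annual growth rate is the constant yearly rate r that satisfies start × (1 + r)^years = end, so r = (end / start)^(1 / years) - 1. A minimal Python check using the Bloomberg Intelligence numbers quoted above:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    return (end_value / start_value) ** (1 / years) - 1

# Bloomberg Intelligence figures cited in the text, in billions of USD.
start_2022 = 40
end_2032 = 1_300
rate = cagr(start_2022, end_2032, years=2032 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```

The result is about 41.6%, consistent with the rounded 42% in the report.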

Key Drivers of AI Market Growth:

- Consumer Generative AI: The surge in consumer generative AI programs, such as Google’s Bard and OpenAI’s ChatGPT, is driving substantial market expansion. These tools are enhancing user interactions and creating new opportunities for personalized experiences.

- Technological Advancements: Rapid advancements in AI infrastructure, including training and inference devices, are enabling more efficient and powerful AI solutions. This technological evolution is critical for handling the increasing complexity and scale of AI models.

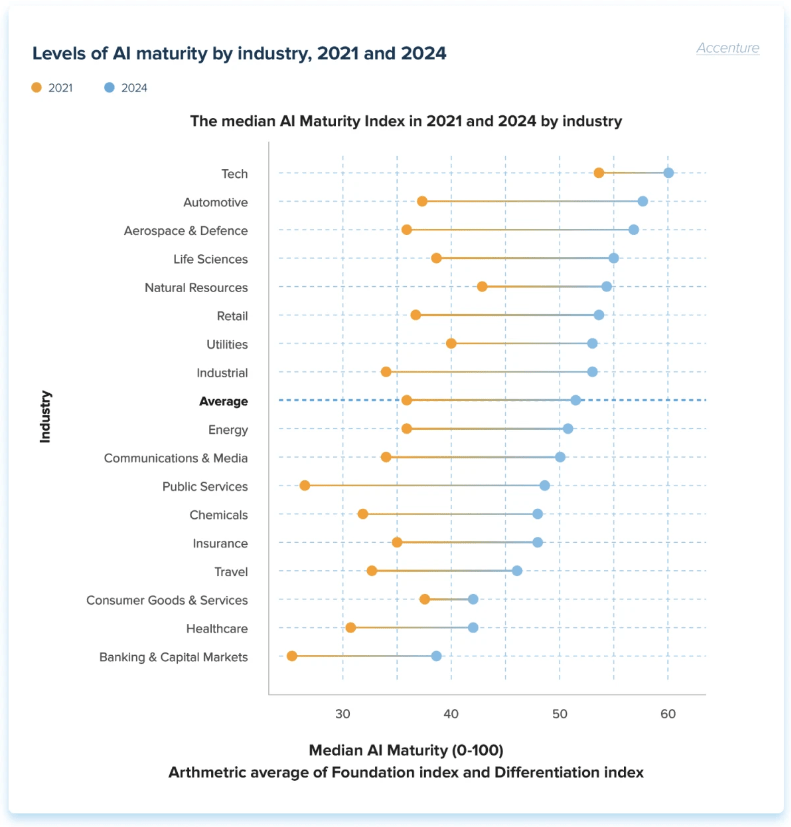

- Industry Adoption: Major sectors such as automotive, healthcare, banking, and manufacturing are leading the charge in AI adoption. For example, the automotive industry is leveraging AI for autonomous driving technologies, while healthcare is utilizing AI for diagnostic imaging and personalized medicine.

- Regional Insights: North America remains the dominant region in the AI market, driven by tech giants like Meta (Facebook), Amazon, Google, IBM, Microsoft, and Apple. These companies are making significant investments in AI research and development, solidifying their leadership in the global market.

Challenges and Opportunities:

- Talent Shortages: The demand for skilled professionals in AI-related fields, such as machine learning, data science, and software engineering, is outpacing supply. This shortage poses a challenge to the rapid development and deployment of AI technologies.

- Regulatory and Privacy Concerns: As AI technologies become more integrated into various applications, issues related to data privacy, security, and regulatory compliance are increasingly important. Addressing these concerns is crucial for maintaining public trust and ensuring the responsible use of AI.

For a detailed analysis of AI market trends, refer to the Bloomberg Intelligence report.

Hardware Performance Analysis

When investing in AI hardware, understanding the performance characteristics of different components is crucial. The primary focus for AI workloads is often on Graphics Processing Units (GPUs) and their interaction with the system’s PCI Express (PCIe) interface.

PCIe Generations and Their Impact:

- PCIe 3.0: Introduced in 2010, PCIe 3.0 runs at 8 GT/s per lane, giving roughly 1 GB/s per lane in each direction and about 16 GB/s for an x16 slot. This generation marked a significant improvement over PCIe 2.0, supporting more demanding applications with higher performance requirements.

- PCIe 4.0: Finalized in 2017, PCIe 4.0 doubled the rate to 16 GT/s, or roughly 2 GB/s per lane and 32 GB/s for an x16 slot. This generation provided enhanced support for high-performance graphics cards and storage solutions.

- PCIe 5.0: Released in 2019, PCIe 5.0 doubled it again to 32 GT/s, or roughly 4 GB/s per lane and 64 GB/s for an x16 slot. This significant increase in bandwidth caters to emerging data-intensive applications, including advanced AI and machine learning tasks.
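The per-lane figures follow from each generation's transfer rate. PCIe 3.0 through 5.0 all use 128b/130b line encoding, so 128 of every 130 bits carry data, and each transfer moves one bit per lane. The Python sketch below derives the theoretical bandwidth per direction; it ignores packet and protocol overhead, so real-world throughput is somewhat lower.

```python
# Per-lane transfer rates in gigatransfers per second (GT/s) for each generation.
TRANSFER_RATE_GTS = {"PCIe 3.0": 8, "PCIe 4.0": 16, "PCIe 5.0": 32}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding used by PCIe 3.0 through 5.0

def lane_bandwidth_gbs(gts: float) -> float:
    """Theoretical bandwidth per lane, per direction, in GB/s (one bit per transfer)."""
    return gts * ENCODING_EFFICIENCY / 8  # divide by 8 to convert bits to bytes

for gen, gts in TRANSFER_RATE_GTS.items():
    per_lane = lane_bandwidth_gbs(gts)
    print(f"{gen}: {per_lane:.2f} GB/s per lane, {per_lane * 16:.1f} GB/s for x16")
```

This gives about 0.98, 1.97, and 3.94 GB/s per lane, which is why the rounded figures of 1, 2, and 4 GB/s are commonly quoted.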

Real-World Performance Considerations:

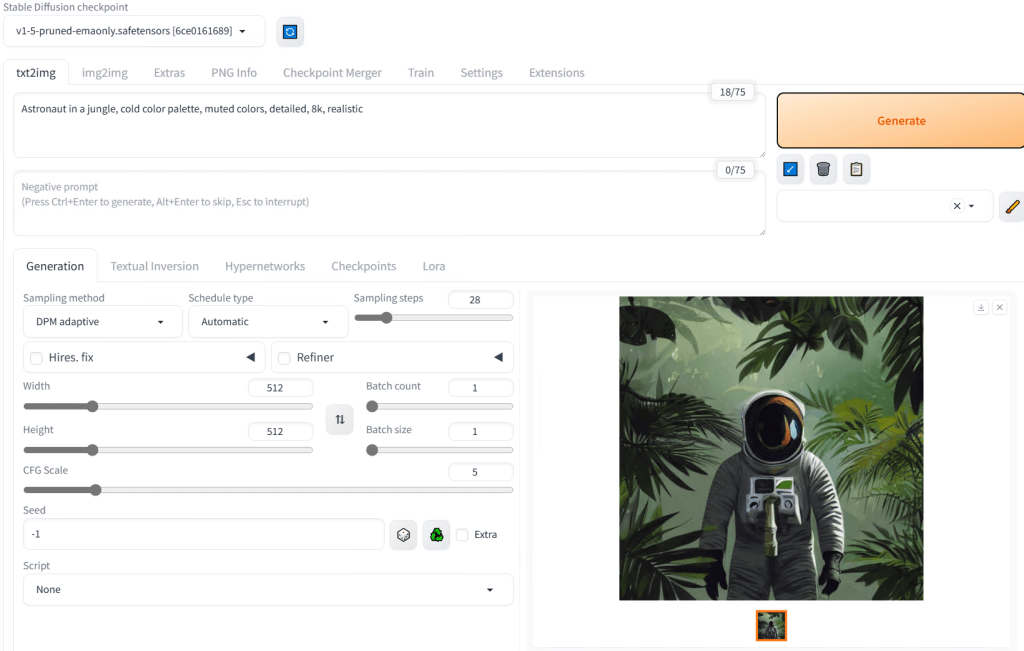

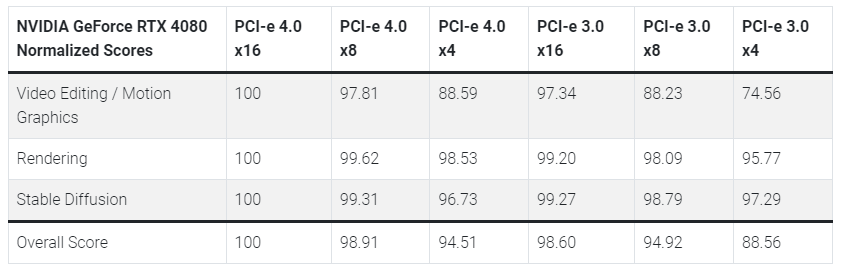

Despite the theoretical performance gains with newer PCIe generations, real-world benchmarks often reveal more nuanced results. For instance, in a study by Puget Systems, the performance difference between PCIe 4.0 x16 and PCIe 4.0 x8 configurations for Stable Diffusion tasks was minimal, suggesting that the actual performance benefits may not always align with theoretical expectations.
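One plausible reason for such small differences is that many workloads cross the PCIe bus only occasionally, for example when model weights are first copied into GPU memory, and then compute mostly on the GPU. The Python sketch below estimates idealized copy times over PCIe 4.0 x16 and x8 links for a hypothetical 4 GB payload; Puget Systems' actual test setup and data sizes are not reproduced here.

```python
# PCIe 4.0: 16 GT/s per lane with 128b/130b encoding, about 1.97 GB/s per lane per direction.
PCIE4_LANE_GBS = 16 * (128 / 130) / 8

def transfer_seconds(payload_gb: float, lanes: int) -> float:
    """Idealized time to move payload_gb over a PCIe 4.0 link with `lanes` lanes."""
    return payload_gb / (PCIE4_LANE_GBS * lanes)

PAYLOAD_GB = 4.0  # hypothetical: model weights copied to the GPU once at startup

for lanes in (16, 8):
    print(f"x{lanes}: {transfer_seconds(PAYLOAD_GB, lanes):.2f} s")
```

Halving the lanes doubles the copy time, but here that means roughly 0.13 s versus 0.25 s, paid once. If the rest of the work happens inside GPU memory, that gap barely shows up in total run time.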

Practical Implications:

- Cost vs. Performance: Newer PCIe generations often come with higher price tags. Understanding the real-world performance gains can help in making more cost-effective decisions. For many AI applications, investing in the latest generation may not always provide a proportional benefit.

- Future-Proofing: While investing in cutting-edge hardware can offer advantages, it’s essential to balance this with the specific needs and budget of the application. Choosing hardware that meets current requirements while allowing for future upgrades can be a prudent strategy.

For further insights into PCIe performance and its impact on AI workloads, explore the detailed analysis by Puget Systems.

The Rise of Private AI

In an era of rapid AI advancement, the rise of private AI environments marks a critical shift in how organizations handle and secure their data. As generative AI technologies proliferate, organizations face growing challenges around data security, privacy, and compliance, and private AI responds with secure, controlled environments for handling sensitive information.

Key Factors Driving the Rise of Private AI:

- Data Privacy and Security: The foremost reason for adopting private AI solutions is the need for enhanced data privacy and security. Public cloud services, while convenient, often pose risks related to data breaches and unauthorized access. Private AI environments offer a more secure alternative, allowing organizations to retain complete control over their data and infrastructure. This control is crucial for industries handling sensitive information, such as healthcare, finance, and government sectors.

- Regulatory Compliance: With stringent regulations like the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States, organizations must ensure that their data practices comply with legal standards. Private AI setups enable organizations to implement tailored security measures and maintain compliance with these regulations, reducing the risk of costly fines and legal issues.

- Cost Considerations: While private AI environments can be more expensive to set up and maintain than public cloud services, they can offer long-term cost benefits for organizations with significant data handling requirements. Avoiding recurring cloud service fees and tailoring infrastructure to specific needs can make costs more predictable and manageable over time.

- Performance and Customization: Private AI environments allow for greater customization of hardware and software configurations, optimizing performance for specific use cases. This flexibility ensures that AI models and applications run efficiently, without the limitations imposed by shared public cloud resources. For example, organizations can deploy high-performance GPUs and tailored storage solutions to meet the demands of complex AI tasks.
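The cost trade-off above is essentially a break-even calculation: a private deployment pays off once its monthly savings over the cloud have covered the upfront hardware cost. The Python sketch below uses purely hypothetical figures and deliberately ignores financing, depreciation, hardware resale value, and staffing, any of which can move the answer substantially.

```python
import math

def breakeven_month(upfront_cost: float, monthly_onprem: float, monthly_cloud: float) -> float:
    """Months until cumulative private (on-prem) cost falls below cumulative cloud cost."""
    monthly_savings = monthly_cloud - monthly_onprem
    if monthly_savings <= 0:
        return math.inf  # the private setup never pays for itself on running costs alone
    return upfront_cost / monthly_savings

# All figures are hypothetical placeholders, not quotes or benchmarks.
months = breakeven_month(upfront_cost=250_000, monthly_onprem=4_000, monthly_cloud=15_000)
print(f"Break-even after ~{months:.1f} months")
```

With these placeholder numbers the private setup breaks even after roughly 23 months; an organization with lighter or burstier workloads might never reach that point, which is why the benefit depends on significant, sustained data handling requirements.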

The Future of Private AI:

The demand for private AI environments is expected to grow as organizations increasingly recognize the importance of data security and compliance. According to CIO, a Foundry publication, the shift toward private cloud solutions is driven by the need for robust security and privacy assurances that public cloud providers may struggle to deliver. As AI technologies continue to evolve, the capabilities of private AI environments will advance, offering even more powerful and secure solutions for organizations.

Decentralization - Capturing Unused Supply

The Ethereum Merge in September 2022, which marked the network’s transition from Proof of Work (PoW) to Proof of Stake (PoS), was a pivotal moment in the crypto industry. While it dramatically reduced Ethereum’s energy consumption and environmental impact, it also had profound consequences for crypto miners. The shift to PoS made mining equipment obsolete for Ethereum, leaving a sudden surplus of idle computational power. Miners scrambled for alternative uses for their hardware, creating a unique opportunity for decentralized platforms to capture this idle supply.

This surplus of computational resources represented both a challenge and an opportunity. On one hand, miners faced the prospect of sunk costs in expensive hardware that could no longer generate revenue. On the other hand, this surplus created an opportunity for new applications and projects that could harness this idle compute power in innovative ways.

The Emergence of Decentralized Compute Platforms

Several blockchain-based platforms quickly recognized this opportunity and began to offer decentralized alternatives to traditional cloud computing services. These platforms allow former miners to repurpose their hardware, providing valuable computational resources to a variety of projects and applications. By leveraging blockchain technology, these platforms can offer compute power at a fraction of the cost of centralized cloud providers, while also benefiting from the security, transparency, and resilience of decentralized networks.

Flux: Flux is a decentralized cloud infrastructure that allows users to deploy and manage applications across a decentralized network of nodes. By providing a platform where anyone can contribute their unused compute power, Flux offers a cost-effective and scalable alternative to traditional cloud services. This decentralized approach not only reduces costs but also enhances security and reliability by eliminating single points of failure.

Akash Network: Akash is a decentralized cloud marketplace that connects users with providers offering unused compute resources. It allows developers to deploy applications on a decentralized network, with the flexibility to choose from a wide range of compute options. Akash leverages blockchain to automate the deployment process and ensure transparent, secure, and efficient transactions between users and providers.

Octa: Octa operates as a decentralized compute network that aggregates idle computational resources from various providers, including former crypto miners. The platform enables users to access affordable and scalable compute power for a wide range of applications, from scientific research to machine learning and AI. By decentralizing the provisioning of compute resources, Octa reduces costs and increases accessibility for users across the globe.

Dynex: Dynex is another decentralized platform that aims to capture unused computational power for specialized applications, such as artificial intelligence (AI) and machine learning (ML). By connecting idle GPUs from former crypto miners, Dynex provides a distributed network for running complex computations at a lower cost than traditional cloud providers. This not only gives miners a new revenue stream but also democratizes access to high-performance computing resources.
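Each of these platforms has its own matching, pricing, and payment mechanics, which this article does not detail. As a hypothetical illustration of the core marketplace idea, the Python sketch below picks the cheapest provider offer that meets a job's GPU memory requirement. The `Offer` fields, provider IDs, and prices are invented for the example and do not reflect any platform's actual API or rates.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Offer:
    provider: str        # hypothetical provider ID
    gpu_model: str
    vram_gb: int
    usd_per_hour: float  # hypothetical price

def cheapest_match(offers: list[Offer], min_vram_gb: int) -> Optional[Offer]:
    """Return the lowest-priced offer with enough GPU memory, or None if none qualify."""
    eligible = [o for o in offers if o.vram_gb >= min_vram_gb]
    return min(eligible, key=lambda o: o.usd_per_hour, default=None)

# Hypothetical offers from former mining rigs and a data-center node.
offers = [
    Offer("rig-a", "RTX 3090", 24, 0.30),
    Offer("rig-b", "RTX 3080", 10, 0.15),
    Offer("dc-1", "A100", 80, 1.50),
]
job_needs_gb = 20  # GPU memory required by the hypothetical workload
best = cheapest_match(offers, job_needs_gb)
print(best.provider if best else "no provider can run this job")
```

Here the cheapest rig is filtered out for lacking memory, and the former mining rig with 24 GB undercuts the data-center node. Real platforms typically layer provider reputation, escrowed payment, and workload isolation on top of this basic selection.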

Benefits of Leveraging Unused Compute Power

Cost Efficiency: Decentralized platforms offer compute power at a significantly lower cost compared to traditional centralized cloud providers. By capturing unused resources, these platforms can provide affordable options for developers, researchers, and businesses looking to run compute-intensive tasks without incurring high costs.

Environmental Impact: Repurposing idle hardware for decentralized computing helps mitigate the environmental impact of crypto mining. Instead of letting powerful GPUs go to waste, these resources are put to productive use, reducing the need for additional energy consumption associated with manufacturing new hardware.

Increased Access to Resources: Decentralized compute platforms democratize access to high-performance computing by making it available to a broader audience. Researchers, small businesses, and individual developers can access the computational power they need without relying on expensive, centralized cloud services.

Security and Resilience: By distributing compute resources across a decentralized network, these platforms can enhance security and resilience. Because there is no single point of failure, an outage or attack on one node need not take down the whole service.
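The resilience argument can be quantified with a simple redundancy model: if a workload runs on several replicas and it stays up as long as any one replica is up, the chance of total failure shrinks geometrically. The Python sketch below assumes a hypothetical 99% availability per node and, crucially, independent failures; correlated failures (shared power, network, or a common software bug) reduce the benefit considerably.

```python
def availability_with_replicas(node_availability: float, replicas: int) -> float:
    """Probability that at least one replica is up, assuming nodes fail independently."""
    p_node_down = 1 - node_availability
    return 1 - p_node_down ** replicas

for n in (1, 2, 3):
    print(f"{n} replica(s): {availability_with_replicas(0.99, n):.6f}")
```

Under these assumptions, three replicas of a 99%-available node are up about 99.9999% of the time.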

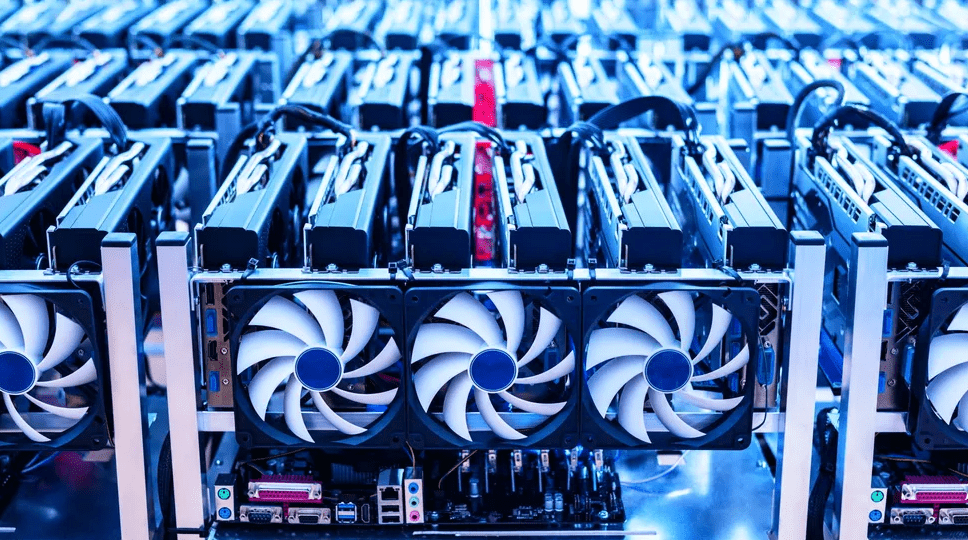

Unleash the Power of AI with Chainsavvy HPC Systems

Introducing Chainsavvy's advanced HPC system (codename: APEX), meticulously designed to meet the escalating demands of AI and machine learning workloads. Our solution is engineered to accelerate the most intensive computational tasks, ensuring unparalleled performance and reliability. Built upon rigorous testing and proven technology, Chainsavvy's HPC systems empower businesses to streamline their operations and achieve groundbreaking results in AI-driven applications. Whether tackling complex simulations, deep learning algorithms, or big data analytics, our systems deliver unmatched efficiency and scalability, making Chainsavvy the trusted choice for cutting-edge technological solutions.

Experience the difference!

- Simplified Decision Making: With just two tiers, Apex BASE and Apex MAX, Chainsavvy eliminates complexity and lengthy research, empowering clients to focus on what truly matters.

- Rigorous Stress Testing and Comprehensive Build Documentation: Each Chainsavvy product undergoes meticulous stress testing and comes with detailed build analysis, ensuring unparalleled reliability and performance.

- Preconfigured System: Enjoy a true plug-and-play experience: Chainsavvy's systems ship with an operating system preconfigured for your chosen compute marketplace, making setup effortless from the moment you unpack.

- Lifetime Technical Support: We stand by you with lifetime technical support, ensuring assistance is just an email away whenever you need it.

- Legendary Customer Service: Discover Chainsavvy's renowned customer service through our glowing testimonials, and experience our dedication firsthand.

- Unbeatable Pricing: Chainsavvy offers competitive pricing without compromising on quality.

- Proudly Built and Shipped from the USA: Designed with a commitment to supporting American small businesses, every Chainsavvy system is proudly built and shipped from the USA.

- Future-Proofing: All Chainsavvy systems are fully upgradable, ensuring your investment remains relevant and adaptable for years to come.

- Field-Replaceable Components: With a modular design and hot-swappable components, Chainsavvy systems allow for easy field replacement of parts, minimizing downtime.

- Onsite Deployment Assistance: For those seeking a white-glove approach, Chainsavvy offers onsite deployment assistance, ensuring seamless installation and setup.

Ready to revolutionize your AI capabilities? Connect with our experts to explore how Chainsavvy's APEX HPC system can elevate your projects. Reach out today, and let’s build the future together!

If you found this article informative, please share it with your network to spread the word about the latest advancements in AI and the powerful solutions Chainsavvy has to offer.

#AIRevolution #TechInsights #ChainsavvyPower

References

- Shacknews. (2024). “NVIDIA CEO Jensen Huang Says AI Is at an Inflection Point.”

- Apple. (2024). “Private Cloud Compute.”

- InData Labs. (2024). “AI Adoption by Industry.”

- Bloomberg. (2024). “Generative AI to Become a $1.3 Trillion Market by 2032, Research Finds.”

- Why of AI. (2024). “AI Explained.”

- Crystal Rugged. (2024). “What is PCIe Slots, Cards, and Lanes?”

- Synoptek. (2024). “AI, ML, DL, and Generative AI Face-Off: A Comparative Analysis.”

- Teslarati. (2024). “Tesla Not AI Training Compute-Constrained: Elon Musk.”

- Tom’s Hardware. (2024). “Tesla’s $300 Million AI Cluster is Going Live Today.”

- Wikipedia. (2024). “LLAMA (Language Model).”

- Cloudflare. (2024). “What is a Large Language Model?”

- Scaler. (2024). “Artificial Intelligence Tutorial: Subsets of AI.”

- AWS. (2024). “Decentralization in Blockchain.”

- AIMultiple. (2024). “AI Use Cases.”

- DataCamp. (2024). “What is AI: Quick Start Guide for Beginners.”

- Tom’s Hardware. (2024). “Elon Musk Buys Tens of Thousands of GPUs for Twitter AI Project.”

- Puget Systems. (2024). “Impact of GPU PCI-E Bandwidth on Content Creation Performance.”

- ValueCoders. (2024). “Latest AI: 10 Breakthroughs Redefining Industries.”

- CIO. (2024). “Private Cloud Makes Its Comeback, Thanks to AI.”